LLM Task Force

**Warning:** This is a rough draft, and I’m sharing it with you after just 3 hours of brainstorming and designing. It’s not finished, and I’d love your feedback to help me refine this concept. Don’t worry, I won’t get offended if you point out the flaws. I’m a comedian, so my ego is anything but fragile!

Imagine a world where tasks are executed with precision, speed, and accuracy, freeing up valuable time for innovation, creativity, and binge-watching your favorite shows. Welcome to the future of task management, where Large Language Models (LLMs) take center stage in a distributed system that’s about to transform the way we work – or at least, that’s the plan!

As an innovative electrical engineer and part-time stand-up comedian, I’m thrilled to introduce a novel distributed LLM system structure that’s poised to revolutionize task management across industries. This game-changing architecture combines the strengths of LLMs with the power of distributed computing, creating a seamless and efficient task management ecosystem – or as I like to call it, “Task-topia”!

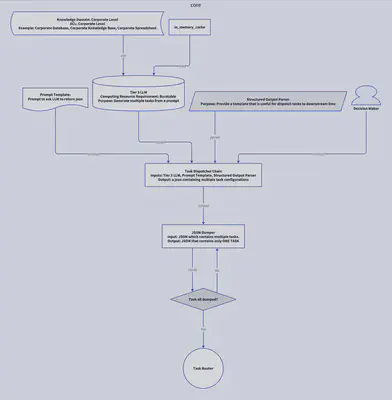

The Core of Innovation (or Where the Magic Happens)

Technology Department: The Engine of Efficiency (or Where the Nerds Live)

Analyst CoPilot Chains: Empowering Experts (or Where the Cool Kids Hang Out)

Each analyst is supported by a Tier 1 LLM, which provides expertise-specific knowledge and resources. Think of it as having a personal “task butler” who’s always got your back. The Analyst CoPilot Chains, comprising tools and resources, enable analysts to work efficiently and effectively, leveraging the power of LLMs to augment their expertise – or as I like to call it, “task-steroids”!
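To make the co-pilot idea a little more concrete, here is a minimal Python sketch of one chain. It assumes a deliberately simple design: the Tier 1 LLM only decides which tool fits a request, and anything it can’t route goes back to the human analyst. The names `AnalystCoPilotChain` and `stub_tier1_llm` are hypothetical. The "LLM" is a keyword-matching stub, so the example runs without any model API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def stub_tier1_llm(request: str, tool_names: List[str]) -> str:
    """Hypothetical stand-in for a Tier 1 LLM call.

    A real chain would prompt a model here; this stub simply returns the
    first tool whose name appears in the request, or "none".
    """
    for name in tool_names:
        if name in request.lower():
            return name
    return "none"


@dataclass
class AnalystCoPilotChain:
    """Hypothetical co-pilot chain: one analyst, one Tier 1 LLM, a set of tools."""
    analyst: str
    expertise: str
    llm: Callable[[str, List[str]], str]
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def handle(self, request: str) -> str:
        # Step 1: the LLM picks which tool (if any) fits the request.
        choice = self.llm(request, list(self.tools))
        # Step 2: run that tool, or hand the request back to the human analyst.
        if choice in self.tools:
            return self.tools[choice](request)
        return f"escalate to {self.analyst}: {request}"


# Usage: a finance analyst with two toy tools.
chain = AnalystCoPilotChain(
    analyst="Dana",
    expertise="finance",
    llm=stub_tier1_llm,
    tools={
        "forecast": lambda req: "forecast ready",
        "summarize": lambda req: "summary ready",
    },
)
print(chain.handle("Please summarize the Q3 report"))  # summary ready
print(chain.handle("Book a meeting room"))             # escalate to Dana: Book a meeting room
```

Keeping the human analyst as the fallback is one possible design choice. It means the butler never silently drops a task it doesn’t understand.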

The Future of Task Management (or Where We’re Headed)

This distributed LLM system structure offers a glimpse into the future of task management, where:

- Tasks are executed with precision and speed, freeing up time for innovation, creativity, and cat videos.

- Collaboration is seamless, with departments and teams working together effortlessly – or at least, that’s the plan!

- Productivity soars, as LLMs take on routine tasks, enabling experts to focus on high-value work – or as I like to call it, “task-nirvana”!

- Decision-making is better informed, with data-driven insights and analytics guiding decision-makers – or as I like to call it, “task-vidence”!

Here is a rough sketch of a sequence diagram that shows how the system works at the Department level when a task is initiated by the Department Head:

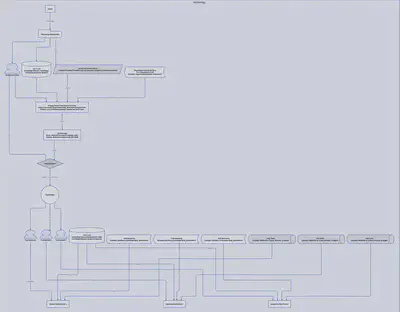

Now that you’ve seen the rough sketch of the system at the Department level, let’s take a look at the Team level, where a task is initiated by the Team Lead:

You may have noticed that the system is designed to be very similar at the Department and Team levels. This is intentional: reusing the same structure keeps the system scalable and makes it easy to extend to other levels of the organization.
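Because the Department and Team levels share the same shape, one way to model them is as a single recursive unit. This is an assumption on my part, not a finished design. Inner nodes split a task and delegate it downwards, and leaves (the analysts) do the actual work. The `Unit` class and its one-subtask-per-member split are hypothetical placeholders for whatever planning the LLMs would really do.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Unit:
    """Hypothetical org unit; the same class models Department, Team and Analyst levels."""
    role: str
    name: str
    members: List["Unit"] = field(default_factory=list)

    def run(self, task: str) -> List[str]:
        # Leaf (Analyst level): do the work, e.g. through the analyst's co-pilot chain.
        if not self.members:
            return [f"{self.name} completed '{task}'"]
        # Inner node (Department or Team level): split the task and delegate downwards.
        # Assumption: one subtask per member; in practice an LLM might plan the split.
        results: List[str] = []
        for i, member in enumerate(self.members, start=1):
            results.extend(member.run(f"{task} / part {i}"))
        return results


team_a = Unit("Team Lead", "Team A", [Unit("Analyst", "Ana"), Unit("Analyst", "Ben")])
team_b = Unit("Team Lead", "Team B", [Unit("Analyst", "Cy")])
dept = Unit("Department Head", "Technology", [team_a, team_b])

# A Department Head starts a task...
for line in dept.run("Migrate reporting"):
    print(line)
# ...and a Team Lead starts one in exactly the same way.
print(team_b.run("Fix bug"))
```

Since `team_b.run(...)` works exactly like `dept.run(...)`, a Team Lead can kick off a task the same way a Department Head does. Adding another level to the organization would only mean nesting one more `Unit`.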

Okay, now let’s take a look at the Analyst level, where a task is initiated by the Analyst:

Your Feedback is Welcome! (or Where You Get to Roast Me)

This is just the beginning, and I’d love your input to help me refine this concept. What do you think about this distributed LLM system structure? Are there any areas you’d like me to explore further? Share your thoughts in the comments below, and don’t hold back – I can take it!